The Chinese version is complete.

Also, for the large 1.mdd and 2.mdd files you can just use the old versions. Relatively few images are new; 99.99999% of the images are identical.

By "old versions", which of the folders here do you mean?

Thanks!

I don't remember. Have a look around; the files should be larger than 10 GB and smaller than 100 GB.

The English version has only 2 folders, and nothing in them is that large.

Then they aren't there, or they were too large to upload. My connection is too slow.

The Chinese version has large mdd files. Those aren't interchangeable, are they?

They're definitely interchangeable. The image data changes only negligibly from month to month.

So "ZH20230901" has 31 offline mdd files of 4 GB each, about 124 GB in total. Can I use those?

Thanks!

That should work.

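The latest 1.20 data is 9 GB, the largest I've seen since I started following it. If you're interested, give it a try and see how much is still missing.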

It is a bit bigger, but filling in the content missing from the dictionary should add at least 11 GB. The gap is far from small.

The whole process is now fully automated (from downloading the data to converting it to mdx), but every step is slow.

@meandmyhomies Do you know if the recent HTML dumps have resolved the problem of missing content?

I downloaded the latest English Wikipedia dumps. The missing content appears to be limited to the English dictionary rather than the Wikipedia dumps. The latter have excess "content" in the form of previous versions of the same article (dozens of nearly duplicate pages for the same headword or article), although it is possible that the Wikipedia dumps suffer from the same missing-content problem as well.

Nobody has pointed out a missing headword in the Wikipedia dumps yet, so for now that is an unknown to me. I still need to parse the data to know whether the excess-version problem is fixed.

I haven’t downloaded the dictionary dump to know if the missing content problem is fixed in their dictionary dumps yet. Not actively working on mdict stuff lately.

Thank you very much for your elaboration.

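Thank you so much!! This must have been a huge amount of work! Do you ever make dictionaries in mobi format? I've been using kindlegen to convert mdx dictionaries and it keeps throwing errors, which is driving me crazy. If you could put together a tutorial, I'd be extremely grateful!!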

Convert the mobi to epub and you have HTML, which is basically the raw material for an mdx (a website is just HTML, after all).
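To illustrate the "HTML is basically mdx raw material" point, here is a minimal sketch that serializes (headword, HTML) pairs into the plain-text source layout commonly fed to MdxBuilder: a headword line, the definition line, then a `</>` separator. The function name `to_mdx_source` is hypothetical, and collapsing each definition onto a single line is a conservative assumption about the source format, not a confirmed requirement.

```python
from typing import Iterable, Tuple


def to_mdx_source(entries: Iterable[Tuple[str, str]]) -> str:
    """Serialize (headword, html) pairs into MdxBuilder-style source text.

    Hypothetical helper: each entry becomes a headword line, one line of
    HTML, and a '</>' separator line.
    """
    lines = []
    for headword, html in entries:
        # Assumption: keep each definition on one line to be safe,
        # so internal line breaks are replaced with spaces.
        one_line = " ".join(html.splitlines())
        lines.append(headword.strip())
        lines.append(one_line)
        lines.append("</>")
    # Terminate every line (including the last) with a newline.
    return "".join(line + "\n" for line in lines)


if __name__ == "__main__":
    sample = [
        ("apple", "<b>apple</b>\n<p>A fruit.</p>"),
        ("banana", "<p>Another fruit.</p>"),
    ]
    print(to_mdx_source(sample), end="")
```

The resulting text file would then be compiled with MdxBuilder; extracting the (headword, HTML) pairs from the epub's XHTML files is left out here because it depends on how the particular book is structured.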

Updated with the latest content as of 2025-10-11. This build no longer relies on the buggy HTML dumps, which have been discontinued.

84836xx headwords in total, so it is fairly complete. Images and offline audio are on the way.

For now there is only a Baidu link; the files are too large to fit on freemdict.

Waiting for the offline audio and images.

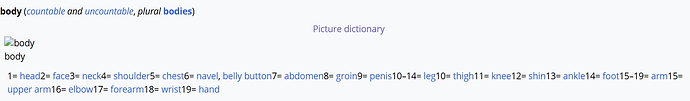

Nothing was left out; those are sequence numbers.